Day 22: Low-Latency Consumer Optimization

Building StreamSocial’s Lightning-Fast Notification Engine

What We’re Building Today

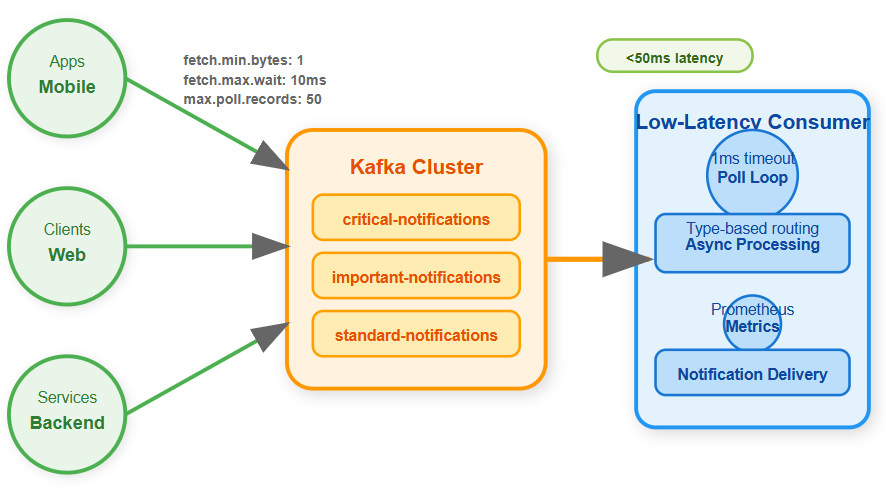

Today you’ll build a Kafka consumer that delivers notifications faster than you can blink. We’re talking about processing each message in under 50 milliseconds, while a typical blink takes 100 milliseconds or more. Here’s what we’ll cover:

Optimize Kafka consumers for ultra-fast message processing

Configure poll timeouts and fetch settings for minimal latency

Build StreamSocial’s real-time notification delivery pipeline

Create a monitoring dashboard to track every millisecond

Understanding the Speed Challenge

Think about Instagram or Twitter. When someone likes your post, you get a notification almost instantly. That’s not magic - it’s a carefully optimized system that processes millions of events every hour while keeping latency under 100ms.

StreamSocial needs the same performance. Our users expect instant notifications for messages, likes, and trending content. Default Kafka settings prioritize throughput (handling lots of messages) over latency (processing each message quickly). Today, we flip that equation.

The Three Performance Levers

Poll Timeout: Your Response Time

The poll(duration) method tells the consumer how long to block while waiting for new messages. Think of it like checking your phone:

Short timeout (1-10ms): You check constantly, see messages immediately, but use more battery (CPU)

Long timeout (500ms+): You check occasionally, messages pile up, but save battery

For notifications, we want near-instant responses, so we’ll use 1ms timeouts.
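To make the short-timeout idea concrete, here is a minimal sketch of a poll loop. It does not talk to a real broker: FakeConsumer is a hypothetical stand-in backed by an in-memory queue, and run_poll_loop, max_empty_polls, and the sample messages are names made up for illustration. A real loop would run until shutdown rather than stopping after a few empty polls.

```python
import queue


class FakeConsumer:
    """Hypothetical stand-in for a Kafka consumer, backed by an in-memory queue."""

    def __init__(self, messages):
        self._queue = queue.Queue()
        for message in messages:
            self._queue.put(message)

    def poll(self, timeout_s):
        # Block for at most timeout_s seconds; return None if nothing arrived.
        try:
            return self._queue.get(timeout=timeout_s)
        except queue.Empty:
            return None


def run_poll_loop(consumer, handle, poll_timeout_s=0.001, max_empty_polls=5):
    """Poll with a short timeout until max_empty_polls polls in a row come back empty."""
    empty_polls = 0
    handled = 0
    while empty_polls < max_empty_polls:
        message = consumer.poll(poll_timeout_s)
        if message is None:
            # An empty poll is the price of a short timeout: a wake-up with no work.
            empty_polls += 1
            continue
        empty_polls = 0
        handle(message)
        handled += 1
    return handled


if __name__ == "__main__":
    delivered = []
    consumer = FakeConsumer(["like:42", "message:7", "trending:3"])
    count = run_poll_loop(consumer, delivered.append)
    print(count, delivered)
```

The 1ms value matches the timeout chosen above. Raising poll_timeout_s reduces the number of empty wake-ups, which is the battery-versus-freshness trade-off from the phone analogy.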

Fetch Size: How Much to Grab

These settings control how Kafka batches messages:

fetch.min.bytes: Minimum data before returning (we’ll use 1 byte - don’t wait!)

fetch.max.wait.ms: Maximum wait time for batching (we’ll use 10ms - barely any wait!)

Smaller fetches mean lower latency but more network calls. For notifications, speed wins.
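As a rough sketch, the two fetch settings can be kept in one plain dictionary and translated into whatever naming style your client library expects. The names LOW_LATENCY_FETCH and to_keyword_args are made up for this example. The underscore style shown matches the keyword arguments used by the kafka-python client, but treat that as an assumption and check your client's documentation; librdkafka-based clients, for instance, spell the wait setting fetch.wait.max.ms.

```python
# Property names exactly as they appear in the text above (Java-client style).
LOW_LATENCY_FETCH = {
    "fetch.min.bytes": 1,     # return as soon as any data is available
    "fetch.max.wait.ms": 10,  # cap the broker-side wait at 10ms
}


def to_keyword_args(properties):
    """Turn dotted property names into underscore keyword names.

    Example: "fetch.min.bytes" becomes "fetch_min_bytes".
    """
    return {name.replace(".", "_"): value for name, value in properties.items()}


if __name__ == "__main__":
    for name, value in to_keyword_args(LOW_LATENCY_FETCH).items():
        print(f"{name}={value}")
```

For reference, Kafka's documented consumer defaults are 1 byte for fetch.min.bytes and 500ms for fetch.max.wait.ms, so the second value is the one that actually changes here.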

Processing Loop: The Engine

Your processing loop determines how fast you handle each message:

Single-threaded: Simple, predictable

Multi-threaded: Parallel processing

Async: Non-blocking I/O for downstream services

We’ll use async processing to prevent slow operations from blocking other messages.
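Here is a small sketch of what "async so slow operations don't block" can look like, using only Python's asyncio. The deliver function is hypothetical, with asyncio.sleep standing in for a call to a downstream service, and process_batch, max_in_flight, and the sample notifications are illustrative names rather than part of any Kafka API.

```python
import asyncio


async def deliver(notification, delay_s, completed):
    """Hypothetical downstream call; asyncio.sleep stands in for network I/O."""
    await asyncio.sleep(delay_s)
    completed.append(notification)


async def process_batch(batch, max_in_flight=100):
    """Start every delivery concurrently, with a cap on in-flight deliveries."""
    completed = []
    limit = asyncio.Semaphore(max_in_flight)

    async def guarded(notification, delay_s):
        # The semaphore keeps a burst of messages from overwhelming downstream services.
        async with limit:
            await deliver(notification, delay_s, completed)

    await asyncio.gather(*(guarded(n, d) for n, d in batch))
    return completed  # notifications in the order they finished


if __name__ == "__main__":
    # The slow delivery arrives first but does not hold up the two fast ones.
    batch = [("slow-push", 0.05), ("like", 0.001), ("message", 0.002)]
    print(asyncio.run(process_batch(batch)))
```

Notice that the completion order differs from the arrival order. That is the point for latency, but it also means anything that depends on ordering, such as per-user sequencing or deciding when to commit offsets, needs extra care in a real consumer.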